In an unmarked office building in Austin, Texas, two small rooms contain a handful of Amazon employees designing two types of microchips for training and accelerating generative AI. These custom chips, Inferentia and Trainium, offer AWS customers an alternative to training their large language models on Nvidia GPUs, which have been getting difficult and expensive to procure.

“The entire world would like more chips for doing generative AI, whether that’s GPUs or whether that’s Amazon’s own chips that we’re designing,” Amazon Web Services CEO Adam Selipsky told CNBC in an interview in June. “I think that we’re in a better position than anybody else on Earth to supply the capacity that our customers collectively are going to want.”

But others have moved faster, and invested more, to capture business from the generative AI boom. When OpenAI launched ChatGPT in November 2022, Microsoft gained widespread attention for hosting the viral chatbot and investing a reported $13 billion in OpenAI. It was quick to add the generative AI models to its own products, incorporating them into Bing in February.

That same month, Google launched its own large language model, Bard, followed by a $300 million investment in OpenAI rival Anthropic.

It wasn’t until April that Amazon announced its own family of large language models, called Titan, along with a service called Bedrock to help developers enhance software using generative AI.

“Amazon is not used to chasing markets. Amazon is used to creating markets. And I think for the first time in a long time, they are finding themselves on the back foot and they are working to play catch up,” said Chirag Dekate, VP analyst at Gartner.

Meta also recently released its own LLM, Llama 2. The open-source ChatGPT rival is now available for people to test on Microsoft’s Azure public cloud.

Chips as ‘true differentiation’

In the long run, Dekate said, Amazon’s custom silicon could give it an edge in generative AI.

“I think the real differentiation is the technical capabilities that they’re bringing to bear,” he said. “Because guess what? Microsoft does not have Trainium or Inferentia.”

AWS quietly started production of custom silicon back in 2013 with a piece of specialized hardware called Nitro. It’s now the highest-volume AWS chip. Amazon told CNBC there is at least one in every AWS server, with a total of more than 20 million in use.

AWS started production of custom silicon back in 2013 with this piece of specialized hardware called Nitro. Amazon told CNBC in August that Nitro is now the highest-volume AWS chip, with at least one in every AWS server and a total of more than 20 million in use.

Courtesy Amazon

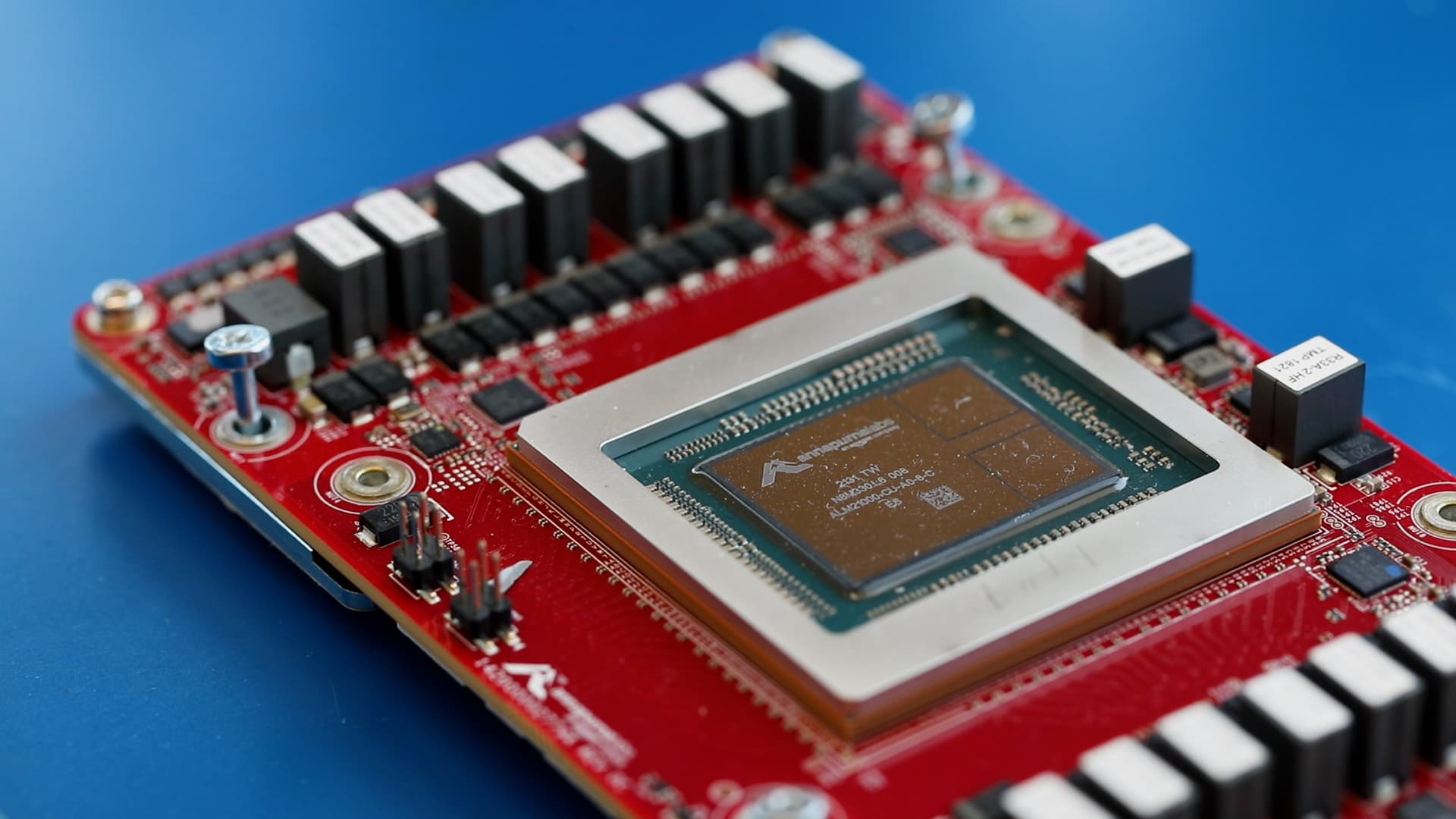

In 2015, Amazon bought Israeli chip startup Annapurna Labs. Then in 2018, Amazon launched its Arm-based server chip, Graviton, a rival to x86 CPUs from giants like AMD and Intel.

“Probably high single-digit to maybe 10% of total server sales are Arm, and a good chunk of those are going to be Amazon. So on the CPU side, they’ve done quite well,” said Stacy Rasgon, senior analyst at Bernstein Research.

Also in 2018, Amazon launched its AI-focused chips. That came two years after Google announced its first Tensor Processing Unit, or TPU. Microsoft has yet to announce the Athena AI chip it has been working on, reportedly in partnership with AMD.

CNBC got a behind-the-scenes tour of Amazon’s chip lab in Austin, Texas, where Trainium and Inferentia are developed and tested. VP of product Matt Wood explained what both chips are for.

“Machine learning breaks down into these two different stages. So you train the machine learning models and then you run inference against those trained models,” Wood said. “Trainium provides about 50% improvement in terms of price performance relative to any other way of training machine learning models on AWS.”

Trainium first came on the market in 2021, following the 2019 launch of Inferentia, which is now on its second generation.

Inferentia allows customers “to deliver very, very low-cost, high-throughput, low-latency machine learning inference, which is all the predictions of when you type in a prompt into your generative AI model, that’s where all that gets processed to give you the response,” Wood said.

For now, however, Nvidia’s GPUs are still king when it comes to training models. In July, AWS launched new AI acceleration hardware powered by Nvidia H100s.

“Nvidia chips have a massive software ecosystem that’s been built up around them over the last like 15 years that nobody else has,” Rasgon said. “The big winner from AI right now is Nvidia.”

Amazon’s custom chips, from left to right, Inferentia, Trainium and Graviton, are shown at Amazon’s Seattle headquarters on July 13, 2023.

Joseph Huerta

Leveraging cloud dominance

AWS’ cloud dominance, however, is a big differentiator for Amazon.

“Amazon does not need to win headlines. Amazon already has a really strong cloud install base. All they need to do is to figure out how to enable their existing customers to expand into value creation motions using generative AI,” Dekate said.

When choosing between Amazon, Google and Microsoft for generative AI, there are millions of AWS customers who may be drawn to Amazon because they’re already familiar with it, running other applications and storing their data there.

“It’s a question of velocity. How quickly these companies can move to develop these generative AI applications is driven by starting first on the data they have in AWS and using compute and machine learning tools that we provide,” explained Mai-Lan Tomsen Bukovec, VP of technology at AWS.

AWS is the world’s biggest cloud computing provider, with 40% of the market share in 2022, according to technology industry researcher Gartner. Although operating income has been down year-over-year for three quarters in a row, AWS still accounted for 70% of Amazon’s overall $7.7 billion operating profit in the second quarter. AWS’ operating margins have historically been far wider than those at Google Cloud.

AWS also has a growing portfolio of developer tools focused on generative AI.

“Let’s rewind the clock even before ChatGPT. It’s not like after that happened, suddenly we hurried and came up with a plan, because you can’t engineer a chip in that quick a time, let alone build a Bedrock service in a matter of two to three months,” said Swami Sivasubramanian, AWS’ VP of database, analytics and machine learning.

Bedrock gives AWS customers access to large language models made by Anthropic, Stability AI, AI21 Labs and Amazon’s own Titan.

“We don’t believe that one model is going to rule the world, and we want our customers to have the state-of-the-art models from multiple providers because they are going to pick the right tool for the right job,” Sivasubramanian said.

An Amazon employee works on custom AI chips, in a jacket branded with AWS’ chip Inferentia, at the AWS chip lab in Austin, Texas, on July 25, 2023.

Katie Tarasov

One of Amazon’s newest AI offerings is AWS HealthScribe, a service unveiled in July to help doctors draft patient visit summaries using generative AI. Amazon also has SageMaker, a machine learning hub that offers algorithms, models and more.

Another big tool is coding companion CodeWhisperer, which Amazon said has enabled developers to complete tasks 57% faster on average. Last year, Microsoft also reported productivity boosts from its coding companion, GitHub Copilot.

In June, AWS announced a $100 million generative AI innovation “center.”

“We have so many customers who are saying, ‘I want to do generative AI,’ but they don’t necessarily know what that means for them in the context of their own businesses. And so we’re going to bring in solutions architects and engineers and strategists and data scientists to work with them one on one,” AWS CEO Selipsky said.

Although so far AWS has focused largely on tools instead of building a competitor to ChatGPT, a recently leaked internal email shows Amazon CEO Andy Jassy is directly overseeing a new central team building out expansive large language models, too.

In the second-quarter earnings call, Jassy said a “very significant amount” of AWS business is now driven by AI and the more than 20 machine learning services it offers. Some examples of customers include Philips, 3M, Old Mutual and HSBC.

The explosive growth in AI has come with a flurry of security concerns from companies worried that employees are putting proprietary information into the training data used by public large language models.

“I can’t tell you how many Fortune 500 companies I’ve talked to who have banned ChatGPT. So with our approach to generative AI and our Bedrock service, anything you do, any model you use through Bedrock will be in your own isolated virtual private cloud environment. It’ll be encrypted, it’ll have the same AWS access controls,” Selipsky said.

For now, Amazon is only accelerating its push into generative AI, telling CNBC that “over 100,000” customers are using machine learning on AWS today. Although that’s a small percentage of AWS’ millions of customers, analysts say that could change.

“What we’re not seeing is enterprises saying, ‘Oh, wait a minute, Microsoft is so far ahead in generative AI, let’s just go out and switch our infrastructure strategies, migrate everything to Microsoft,’” Dekate said. “If you’re already an Amazon customer, chances are you’re likely going to explore Amazon ecosystems quite extensively.”

— CNBC’s Jordan Novet contributed to this report.

CORRECTION: This article has been updated to reflect Inferentia as the chip used for machine learning inference.