Headlines This Week

OpenAI rolled out a number of big updates to ChatGPT this week. Those updates include “eyes, ears, and a voice” (i.e., the chatbot now boasts image recognition, speech-to-text and text-to-speech synthesis capabilities, and Siri-like vocals, so you’re basically talking to the HAL 9000), as well as a new integration that allows users to browse the open internet.

At its annual Connect event this week, Meta unleashed a slew of new AI-related features. Say hello to AI-generated stickers. Huzzah.

Should you use ChatGPT as a therapist? Probably not. For more information on that, check out this week’s interview.

Last but not least: novelists are still suing the shit out of AI companies for stealing their copyrighted works and turning them into chatbot food.


The Top Story: Chalk One Up for the Good Guys

Image: Elliott Cowand Jr (Shutterstock)

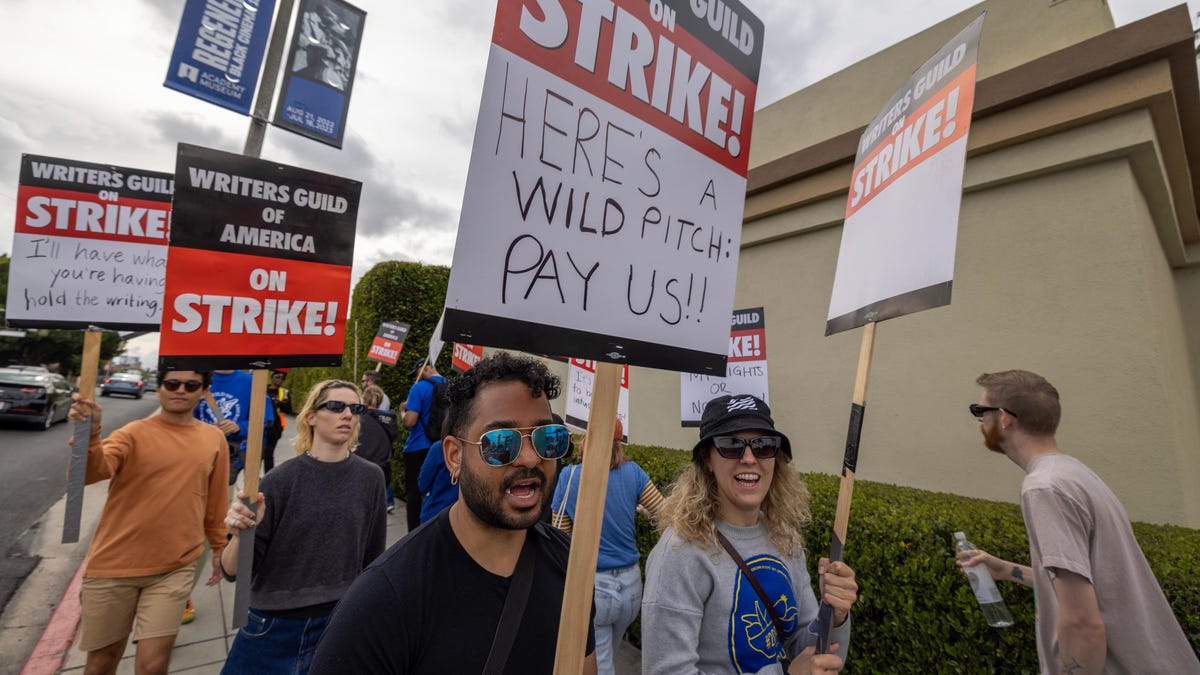

One of the lingering questions that haunted the Hollywood writers’ strike was what sort of protections would (or would not) materialize to shield writers from the threat of AI. Early on, movie and streaming studios made it known that they were excited by the idea that an algorithm could now “write” a screenplay. Why wouldn’t they be? You don’t have to pay a software program. Thus, execs initially refused to make concessions that would’ve clearly defined the screenwriter as a distinctly human role.

Well, now the strike is over. Thankfully, somehow, writers won big protections against the kind of automated displacement they feared. But while it feels like a moment of victory, it could be just the beginning of an ongoing battle between the entertainment industry’s C-suite and its human laborers.

The new WGA contract that emerged from the writers’ strike includes broad protections for the entertainment industry’s laborers. In addition to favorable concessions on residuals and other economic concerns, the contract explicitly outlines protections against displacement via AI. Under the contract, studios won’t be allowed to use AI to write or rewrite literary material, and AI-generated material will not be considered source material for stories and screenplays, which means that humans will retain sole credit for developing creative works. At the same time, a writer may choose to use AI while writing, but a company cannot force them to use it. Finally, companies will have to disclose to writers if any material given to them was generated via AI.

In short: it’s very good news that Hollywood writers have won protections that make clear they won’t be immediately replaced by software just so studio executives can spare themselves a minor expense. Some commentators are even saying that the writers’ strike offers a blueprint for saving everyone’s jobs from the threat of automation. At the same time, it remains clear that the entertainment industry, like many other industries, is still heavily invested in the concept of AI and will be for the foreseeable future. Workers will have to keep fighting to protect their roles in the economy as companies increasingly look for wage-free, automated shortcuts.

The Interview: Calli Schroeder on Why You Shouldn’t Use a Chatbot for a Therapist

Photo: EPIC

This week we chatted with Calli Schroeder, global privacy counsel at the Electronic Privacy Information Center (EPIC). We wanted to talk to Calli about an incident that took place this week involving OpenAI. Lilian Weng, the company’s head of safety systems, raised more than a few eyebrows when she tweeted that she felt “heard & warm” while talking to ChatGPT. She then tweeted: “Never tried therapy before but this is probably it? Try it especially if you usually just use it as a productivity tool.” People had qualms about this, including Calli, who subsequently posted a thread on Twitter breaking down why a chatbot was a less-than-optimal therapeutic partner: “Holy fucking shit, do not use ChatGPT as therapy,” Calli tweeted. We just had to know more. This interview has been edited for brevity and clarity.

In your tweets it seemed like you were saying that talking to a chatbot should not really qualify as therapy. I happen to agree with that sentiment but maybe you could clarify why you feel that way. Why is an AI chatbot probably not the best route for someone seeking mental help?

I see this as a real risk for a couple of reasons. If you’re trying to use generative AI systems as a therapist, and sharing all this really personal and painful information with the chatbot…all of that information is going into the system and it will eventually be used as training data. So your most personal and private thoughts become part of this company’s training data, and they may exist in that dataset forever. You may have no way of ever asking them to delete it. Or they may not be able to remove it. You may not know if it’s traceable back to you. There are a lot of reasons that this whole situation is a huge risk.

Besides that, there’s also the fact that these platforms aren’t actually therapists—they’re not even human. So, not only do they not have any duty of care to you, but they also just literally don’t care. They’re not capable of caring. They’re also not liable if they give you bad advice that ends up making things worse for your mental state.

On a personal level, it makes me both worried and sad that people who are in a mental health crisis are reaching out to machines, just so they can find someone or something that will listen to them and show them some empathy. I think that probably speaks to some much deeper problems in our society.

Yeah, it definitely suggests some deficiencies in our healthcare system.

One hundred percent. I wish that everyone had access to good, affordable therapy. I absolutely recognize that these chatbots are filling a gap because our healthcare system has failed people and we don’t have good mental health services. But the problem is that these so-called solutions can actually make things a lot worse for people. Like, if this was just a matter of someone writing in their diary to express their feelings, that’d be one thing. But these chatbots aren’t a neutral forum; they respond to you. And if people are looking for help and those responses are unhelpful, that’s concerning. If it’s exploiting people’s pain and what they’re telling it, that’s a whole separate issue.

Any other concerns you have about AI therapy?

After I tweeted about this there were some people saying, “Well, if people choose to do this, who are you to tell them not to do it?” That’s a valid point. But the concern I have is that, in a lot of cases involving new technology, people aren’t allowed to make informed choices because there’s not a lot of clarity about how the tech works. If people were aware of how these systems are built, of how ChatGPT produces the content that it does, of where the information you feed it goes, how long it’s stored—if you had a really clear idea of all of that and you were still interested in it, then…sure, that’s fine. But, in the context of therapy, there’s still something problematic about it because if you’re reaching out in this way, it’s entirely possible you’re in a distressed mental state where, by definition, you’re not thinking clearly. So it becomes a very complicated question of whether informed consent is a real thing in this context.

Catch up on all of Gizmodo’s AI news here, or see all the latest news here. For daily updates, subscribe to the free Gizmodo newsletter.