YouTube

If you upload videos to YouTube, you will now have to fess up if you used AI to create a realistic-looking video. In a Monday blog post, YouTube said that its Creator Studio tool will require you to disclose whether the content was made using "synthetic media" (meaning generative AI tools), and that viewers will see labels on such content in certain areas.

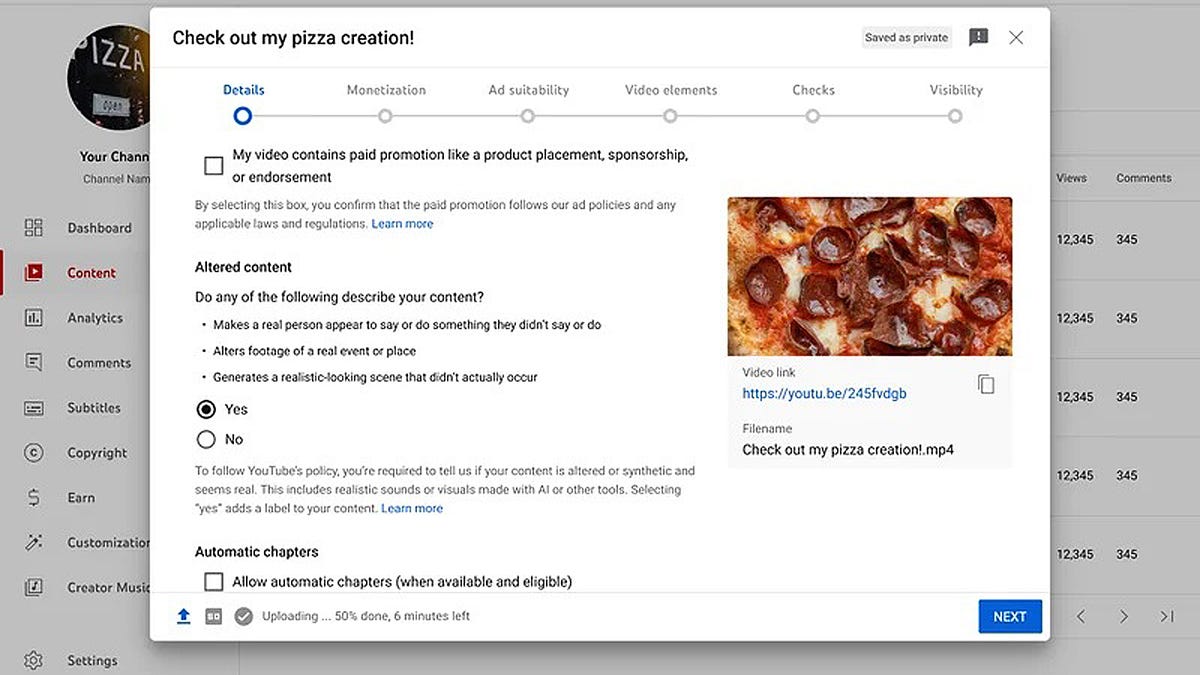

The disclosure step is built into Creator Studio on both YouTube's website and its mobile apps. You'll need to check a box or otherwise indicate whether the content makes a real person appear to say or do something they didn't say or do, alters footage of a real event or place, or generates a realistic-looking scene that didn't actually occur. Once you disclose, a label will appear in the video's expanded description; for particularly sensitive content, a more prominent label may appear on the video player itself.


First announced in November 2023, the policy applies only to realistic-looking video content that could be mistaken for real people, places, or events. Some examples cited in the blog post include:

- Using the likeness of a realistic person: digitally replacing the face of one person with that of another, or artificially creating a person's voice to narrate a video.
- Altering footage of real events or places: making it look like a real building is on fire, or changing a real location in a city to make it look different from reality.
- Generating realistic scenes: depicting fictional major events, such as a tornado moving toward a real town.

Using generative AI to create fantastical or unrealistic scenes, or to apply special effects, would not require a disclosure. Examples include:

- Animating a fantastical world
- Using AI to adjust color or lighting filters
- Using special effects such as background blur or vintage filters
- Applying beauty filters or other visual enhancements

Fake videos on social media and elsewhere have long raised concerns about people being fooled into thinking that what they see is real. Generative AI has made phony videos even easier to produce, and thus more prevalent. Worries are only increasing as we head into a US presidential election, where fake political videos could be used to influence voters.

Okay, so the new policy sounds reasonable. But what happens if a YouTube creator simply doesn’t comply with the rules?

In the blog post, YouTube said it will consider enforcement measures for creators who consistently fail to disclose their use of AI. In certain cases, YouTube might even add its own label if the creator neglects to do so, especially if the content could confuse or mislead people.

Furthermore, YouTube aims to update its privacy policy so that people can request the removal of certain AI-generated content, specifically artificial content that mimics their face or voice.