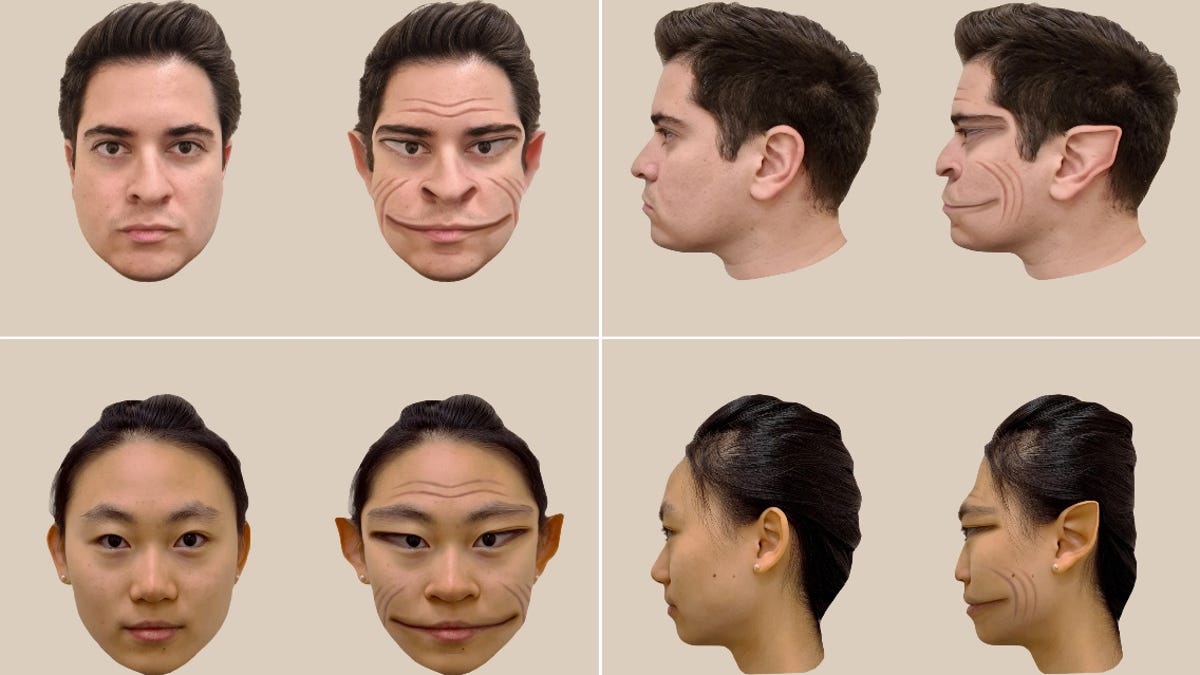

Haunting ‘Demon Faces’ Show What It’s Like to Have Rare Distorted Face Syndrome

A 58-year-old man with a rare medical condition sees faces normally on screens and on paper, but in person they take on a demonic quality. The patient has a unique case of prosopometamorphopsia (PMO), a condition that causes people's faces to appear distorted, reptilian, or otherwise inhuman.